Specialization Made Vital

A hospital is overcrowded with specialists and medical doctors every with their very own specializations, fixing distinctive issues. Surgeons, cardiologists, pediatricians—specialists of every kind be part of palms to offer care, usually collaborating to get the sufferers the care they want. We will do the identical with AI.

Combination of Consultants (MoE) structure in synthetic intelligence is outlined as a mixture or mix of various “expert” fashions working collectively to cope with or reply to advanced knowledge inputs. Relating to AI, each skilled in an MoE mannequin focuses on a a lot bigger downside—similar to each physician specializes of their medical area. This improves effectivity and will increase system efficacy and accuracy.

Mistral AI delivers open-source foundational LLMs that rival that of OpenAI. They’ve formally mentioned the usage of an MoE structure of their Mixtral 8x7B mannequin, a revolutionary breakthrough within the type of a cutting-edge Giant Language Mannequin (LLM). We’ll deep dive into why Mixtral by Mistral AI stands out amongst different foundational LLMs and why present LLMs now make use of the MoE structure highlighting its pace, dimension, and accuracy.

Widespread Methods to Improve Giant Language Fashions (LLMs)

To higher perceive how the MoE structure enhances our LLMs, let’s talk about widespread strategies for bettering LLM effectivity. AI practitioners and builders improve fashions by growing parameters, adjusting the structure, or fine-tuning.

- Growing Parameters: By feeding extra info and deciphering it, the mannequin’s capability to study and characterize advanced patterns will increase. Nevertheless, this could result in overfitting and hallucinations, necessitating intensive Reinforcement Studying from Human Suggestions (RLHF).

- Tweaking Structure: Introducing new layers or modules accommodates the growing parameter counts and improves efficiency on particular duties. Nevertheless, modifications to the underlying structure are difficult to implement.

- High-quality-tuning: Pre-trained fashions might be fine-tuned on particular knowledge or by means of switch studying, permitting present LLMs to deal with new duties or domains with out ranging from scratch. That is the best methodology and doesn’t require vital modifications to the mannequin.

What’s the MoE Structure?

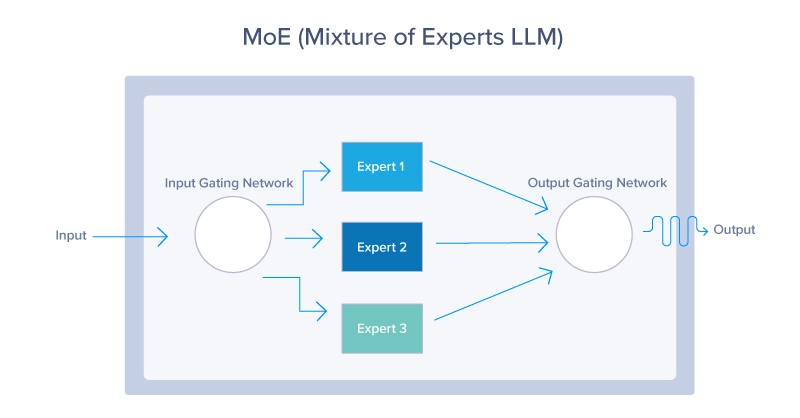

The Combination of Consultants (MoE) structure is a neural community design that improves effectivity and efficiency by dynamically activating a subset of specialised networks, known as specialists, for every enter. A gating community determines which specialists to activate, resulting in sparse activation and decreased computational value. MoE structure consists of two crucial parts: the gating community and the specialists. Let’s break that down:

At its coronary heart, the MoE structure features like an environment friendly site visitors system, directing every car – or on this case, knowledge – to one of the best route based mostly on real-time situations and the specified vacation spot. Every job is routed to probably the most appropriate skilled, or sub-model, specialised in dealing with that individual job. This dynamic routing ensures that probably the most succesful sources are employed for every job, enhancing the general effectivity and effectiveness of the mannequin. The MoE structure takes benefit of all 3 methods the way to enhance a mannequin’s constancy.

- By implementing a number of specialists, MoE inherently will increase the mannequin’s

- parameter dimension by including extra parameters per skilled.

- MoE modifications the basic neural community structure which includes a gated community to find out which specialists to make use of for a delegated job.

- Each AI mannequin has a point of fine-tuning, thus each skilled in an MoE is fine-tuned to carry out as meant for an added layer of tuning conventional fashions couldn’t benefit from.

MoE Gating Community

The gating community acts because the decision-maker or controller inside the MoE mannequin. It evaluates incoming duties and determines which skilled is suited to deal with them. This determination is usually based mostly on realized weights, that are adjusted over time by means of coaching, additional bettering its capability to match duties with specialists. The gating community can make use of varied methods, from probabilistic strategies the place delicate assignments are tasked to a number of specialists, to deterministic strategies that route every job to a single skilled.

MoE Consultants

Every skilled within the MoE mannequin represents a smaller neural community, machine studying mannequin, or LLM optimized for a particular subset of the issue area. For instance, in Mistral, completely different specialists would possibly concentrate on understanding sure languages, dialects, and even varieties of queries. The specialization ensures every skilled is proficient in its area of interest, which, when mixed with the contributions of different specialists, will result in superior efficiency throughout a big selection of duties.

MoE Loss Perform

Though not thought-about a fundamental element of the MoE structure, the loss perform performs a pivotal position sooner or later efficiency of the mannequin, because it’s designed to optimize each the person specialists and the gating community.

It sometimes combines the losses computed for every skilled that are weighted by the chance or significance assigned to them by the gating community. This helps to fine-tune the specialists for his or her particular duties whereas adjusting the gating community to enhance routing accuracy.

The MoE Course of Begin to End

Now let’s sum up all the course of, including extra particulars.

Here is a summarized clarification of how the routing course of works from begin to end:

- Enter Processing: Preliminary dealing with of incoming knowledge. Primarily our Immediate within the case of LLMs.

- Function Extraction: Remodeling uncooked enter for evaluation.

- Gating Community Analysis: Assessing skilled suitability by way of chances or weights.

- Weighted Routing: Allocating enter based mostly on computed weights. Right here, the method of selecting probably the most appropriate LLM is accomplished. In some instances, a number of LLMs are chosen to reply a single enter.

- Job Execution: Processing allotted enter by every skilled.

- Integration of Professional Outputs: Combining particular person skilled outcomes for closing output.

- Suggestions and Adaptation: Utilizing efficiency suggestions to enhance fashions.

- Iterative Optimization: Steady refinement of routing and mannequin parameters.

Widespread Fashions that Make the most of an MoE Structure

- OpenAI’s GPT-4 and GPT-4o: GPT-4 and GPT4o energy the premium model of ChatGPT. These multi-modal fashions make the most of MoE to have the ability to ingest completely different supply mediums like pictures, textual content, and voice. It’s rumored and barely confirmed that GPT-4 has 8 specialists every with 220 billion paramters totalling all the mannequin to over 1.7 trillion parameters.

- Mistral AI’s Mixtral 8x7b: Mistral AI delivers very sturdy AI fashions open supply and have mentioned their Mixtral mannequin is a sMoE mannequin or sparse Combination of Consultants mannequin delivered in a small bundle. Mixtral 8x7b has a complete of 46.7 billion parameters however solely makes use of 12.9B parameters per token, thus processing inputs and outputs at that value. Their MoE mannequin constantly outperforms Llama2 (70B) and GPT-3.5 (175B) whereas costing much less to run.

The Advantages of MoE and Why It is the Most well-liked Structure

Finally, the primary objective of MoE structure is to current a paradigm shift in how advanced machine studying duties are approached. It provides distinctive advantages and demonstrates its superiority over conventional fashions in a number of methods.

- Enhanced Mannequin Scalability

- Every skilled is liable for part of a job, due to this fact scaling by including specialists will not incur a proportional enhance in computational calls for.

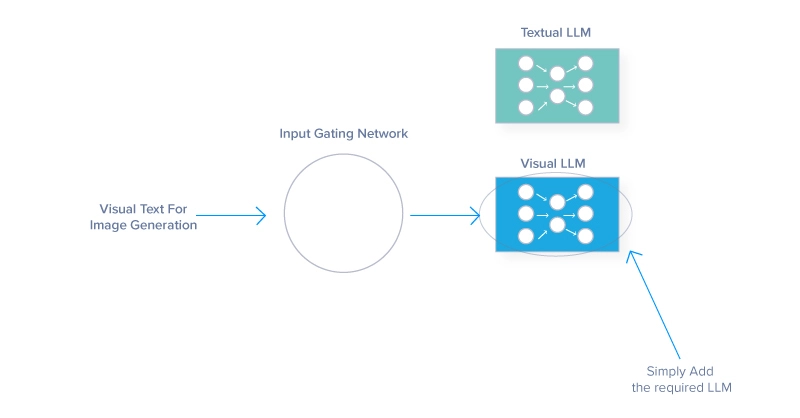

- This modular method can deal with bigger and extra numerous datasets and facilitates parallel processing, rushing up operations. For example, including a picture recognition mannequin to a text-based mannequin can combine an extra LLM skilled for deciphering photos whereas nonetheless having the ability to output textual content. Or

- Versatility permits the mannequin to broaden its capabilities throughout several types of knowledge inputs.

- Improved Effectivity and Flexibility

- MoE fashions are extraordinarily environment friendly, selectively participating solely obligatory specialists for particular inputs, in contrast to typical architectures that use all their parameters regardless.

- The structure reduces the computational load per inference, permitting the mannequin to adapt to various knowledge sorts and specialised duties.

- Specialization and Accuracy:

- Every skilled in an MoE system might be finely tuned to particular facets of the general downside, resulting in better experience and accuracy in these areas

- Specialization like that is useful in fields like medical imaging or monetary forecasting, the place precision is essential

- MoE can generate higher outcomes from slender domains attributable to its nuanced understanding, detailed information, and the flexibility to outperform generalist fashions on specialised duties.

The Downsides of The MoE Structure

Whereas MoE structure provides vital benefits, it additionally comes with challenges that may affect its adoption and effectiveness.

- Mannequin Complexity: Managing a number of neural community specialists and a gating community for guiding site visitors makes MoE improvement and operational prices difficult

- Coaching Stability: Interplay between the gating community and the specialists introduces unpredictable dynamics that hinder attaining uniform studying charges and require intensive hyperparameter tuning.

- Imbalance: Leaving specialists idle is poor optimization for the MoE mannequin, spending sources on specialists that aren’t in use or counting on sure specialists an excessive amount of. Balancing the workload distribution and tuning an efficient gate is essential for a high-performing MoE AI.

It needs to be famous that the above drawbacks normally diminish over time as MoE structure is improved.

The Future Formed by Specialization

Reflecting on the MoE method and its human parallel, we see that simply as specialised groups obtain greater than a generalized workforce, specialised fashions outperform their monolithic counterparts in AI fashions. Prioritizing range and experience turns the complexity of large-scale issues into manageable segments that specialists can sort out successfully.

As we glance to the long run, think about the broader implications of specialised methods in advancing different applied sciences. The rules of MoE may affect developments in sectors like healthcare, finance, and autonomous methods, selling extra environment friendly and correct options.

The journey of MoE is simply starting, and its continued evolution guarantees to drive additional innovation in AI and past. As high-performance {hardware} continues to advance, this combination of skilled AIs can reside in our smartphones, able to delivering even smarter experiences. However first, somebody’s going to want to coach one.

Kevin Vu manages Exxact Corp weblog and works with a lot of its proficient authors who write about completely different facets of Deep Studying.