Image by Editor | Midjourney

This tutorial demonstrates how to use Hugging Face’s Datasets library for loading datasets from different sources with just a few lines of code.

Hugging Face’s Datasets library simplifies the process of loading and processing datasets. It provides a unified interface for hundreds of datasets on Hugging Face’s hub. The library also implements various performance metrics for transformer-based model evaluation.

Initial Setup

Certain Python development environments may require installing the Datasets library before importing it.

!pip install datasets

import datasets

Loading a Hugging Face Hub Dataset by Name

Hugging Face hosts a wealth of datasets in its hub. The following function outputs a list of these datasets by name:

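# Note: later releases of datasets deprecate this helper in favor of huggingface_hub.list_datasets()
from datasets import list_datasets

list_datasets()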

Let’s load one of them, namely the emotions dataset for classifying emotions in tweets, by specifying its name:

from datasets import load_dataset

all_data = load_dataset("jeffnyman/emotions")

If you want to load a dataset you came across while browsing Hugging Face’s website and are unsure what the right naming convention is, click on the “copy” icon beside the dataset name on its page.

The dataset is loaded into a DatasetDict object that contains three subsets or folds: train, validation, and test.

DatasetDict({
    train: Dataset({
        features: ['text', 'label'],
        num_rows: 16000
    })
    validation: Dataset({
        features: ['text', 'label'],
        num_rows: 2000
    })
    test: Dataset({
        features: ['text', 'label'],
        num_rows: 2000
    })
})

Each fold is in turn a Dataset object. Using dictionary operations, we can retrieve the training data fold:

train_data = all_data["train"]

The length of this Dataset object indicates the number of training instances (tweets).

len(train_data)

Leading to this output:

16000

Getting a single instance by index (e.g. the 4th one) is as easy as mimicking a list operation:

train_data[3]

which returns a Python dictionary with the two attributes in the dataset acting as the keys: the input tweet text, and the label indicating the emotion it has been classified with.

{'text': 'i am ever feeling nostalgic about the fireplace i will know that it is still on the property',
 'label': 2}

We can also get several consecutive instances at once by slicing, using the same start:stop notation as a Python list. This operation returns a single dictionary as before, but now each key is associated with a list of values instead of a single value:

{'text': ['i didnt feel humiliated', ...],
 'label': [0, ...]}

Last, to access a single attribute value, we specify two indexes: one for its position and one for the attribute name or key.

Loading Your Own Data

If, instead of resorting to Hugging Face’s datasets hub, you want to use your own dataset, the Datasets library also allows you to do so, by using the same ‘load_dataset()’ function with two arguments: the file format of the dataset to be loaded (such as “csv”, “text”, or “json”) and the path or URL where it is located.

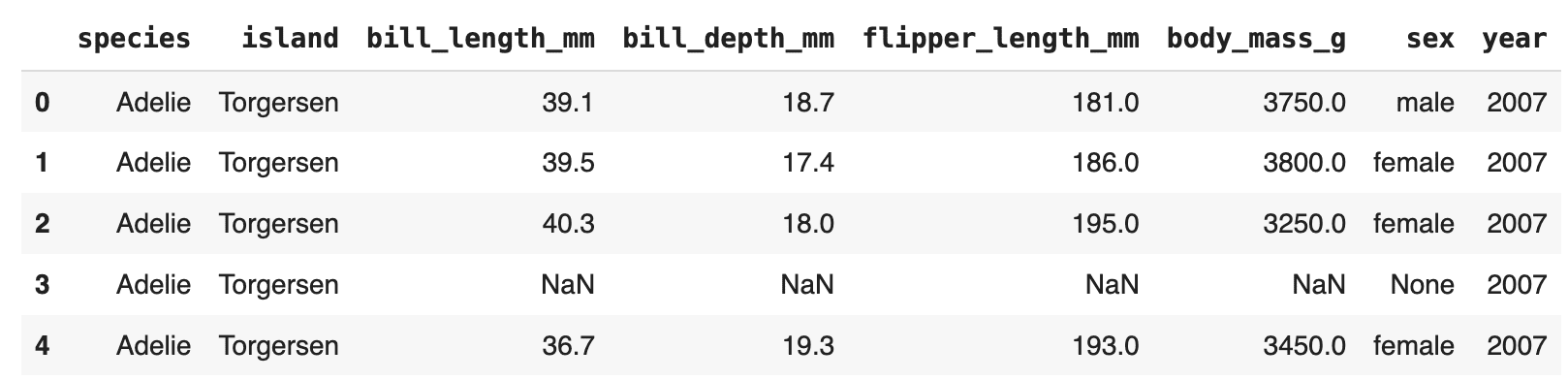

This example loads the Palmer Archipelago Penguins dataset from a public GitHub repository:

url = "https://raw.githubusercontent.com/allisonhorst/palmerpenguins/master/inst/extdata/penguins.csv"

dataset = load_dataset('csv', data_files=url)

Turn Dataset Into Pandas DataFrame

Last but not least, it is sometimes convenient to convert your loaded data into a Pandas DataFrame object, which facilitates data manipulation, analysis, and visualization with the extensive functionality of the Pandas library.

penguins = dataset["train"].to_pandas()

penguins.head()

Now that you have learned how to efficiently load datasets using Hugging Face’s dedicated library, the next step is to leverage them by using Large Language Models (LLMs).

Iván Palomares Carrascosa is a leader, writer, speaker, and adviser in AI, machine learning, deep learning & LLMs. He trains and guides others in harnessing AI in the real world.