Image generated with Midjourney

As a data professional, it's essential to know how to process your data. In the modern era, this means using a programming language to quickly manipulate our dataset to achieve our expected results.

Python is the most popular programming language among data professionals, and many of its libraries are helpful for data manipulation. From simple vectors to parallelization, each use case has a library that can help.

So, which Python libraries are essential for data manipulation? Let's get into it.

1. NumPy

The first library we will discuss is NumPy. NumPy is an open-source library for scientific computing. It was developed in 2005 and has been used in many data science projects.

NumPy is a popular library that provides many valuable features for scientific computing, such as array objects, vector operations, and mathematical functions. Many data science use cases also rely on complex table and matrix calculations, and NumPy simplifies that calculation process.

Let's try NumPy with Python. Many data science platforms, such as Anaconda, have NumPy installed by default. But you can always install it via pip.

pip install numpy

After the installation, we can create a simple array and perform array operations.

import numpy as np

a = np.array([1, 2, 3])
b = np.array([4, 5, 6])
c = a + b
print(c)

Output: [5 7 9]
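The `+` above works element by element. As a small sketch (the variable names are illustrative), the same applies to other arithmetic operators. NumPy's broadcasting rules also let you combine arrays of different shapes, or a scalar and an array, without an explicit loop:

```python
import numpy as np

a = np.array([1, 2, 3])
b = np.array([4, 5, 6])

# Arithmetic operators work element by element on equal-shaped arrays
product = a * b           # [ 4 10 18]

# A scalar is "broadcast" across every element of the array
scaled = a * 10           # [10 20 30]

# A (3, 1) column combined with a (3,) row broadcasts to a (3, 3) grid
grid = a.reshape(3, 1) + b

print(product)
print(scaled)
print(grid)
```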

We can also perform basic statistical calculations with NumPy.

data = np.array([1, 2, 3, 4, 5, 6, 7])
mean = np.mean(data)
median = np.median(data)
std_dev = np.std(data)
print(f"The data mean:{mean}, median:{median} and standard deviation: {std_dev}")

Output: The data mean:4.0, median:4.0 and standard deviation: 2.0
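One detail is worth knowing here. By default, `np.std` computes the population standard deviation (`ddof=0`, dividing by n). If your data is a sample, `ddof=1` divides by n - 1 instead. Here is a short sketch that reuses the same numbers and adds a couple of other summary statistics for illustration:

```python
import numpy as np

data = np.array([1, 2, 3, 4, 5, 6, 7])

# np.std defaults to ddof=0: squared deviations are divided by n (population std)
population_std = np.std(data)

# ddof=1 divides by n - 1 instead, giving the sample standard deviation
sample_std = np.std(data, ddof=1)

# Quartiles (linear interpolation by default) and the range as extra summaries
q1, q3 = np.percentile(data, [25, 75])
value_range = data.max() - data.min()

print(population_std, round(sample_std, 4), q1, q3, value_range)
```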

It's also possible to perform linear algebra operations such as matrix calculations.

x = np.array([[1, 2], [3, 4]])
y = np.array([[5, 6], [7, 8]])
dot_product = np.dot(x, y)
print(dot_product)

Output:

[[19 22]
 [43 50]]
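For 2-D arrays, Python's `@` operator computes the same matrix product as `np.dot`, while `*` multiplies matching elements. The sketch below contrasts the two. As an extra illustration, it also solves a small linear system with `np.linalg.solve`, using a made-up right-hand side `b`:

```python
import numpy as np

x = np.array([[1, 2], [3, 4]])
y = np.array([[5, 6], [7, 8]])

# For 2-D arrays, the @ operator is equivalent to np.dot (matrix product)
matmul = x @ y

# By contrast, * multiplies matching elements (no matrix product)
elementwise = x * y

# Solve the linear system x @ w = b for w (b is an illustrative vector)
b = np.array([5, 6])
w = np.linalg.solve(x, b)

print(matmul)
print(elementwise)
print(w)
```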

NumPy offers many benefits. It handles everything from basic data operations to complex calculations, so it's no wonder many libraries use NumPy as their foundation.

2. Pandas

Pandas is the most popular Python library for data manipulation among data professionals. I'm sure many data science courses use Pandas as the basis for everything that follows.

Pandas is well known because its API is intuitive yet versatile, so many data manipulation problems can be solved easily with the library. Pandas lets users perform data operations and analyze data from various input formats such as CSV, Excel, SQL databases, or JSON.
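Here is a sketch of the input side. The snippet reads a small made-up CSV and the same records as JSON from in-memory strings via `io.StringIO`. With real data, you would pass a file path or URL instead. `pd.read_excel` and `pd.read_sql` follow the same pattern but need extra dependencies (an Excel engine such as openpyxl, or a database connection), so they are left out here.

```python
import io

import pandas as pd

# Hypothetical CSV content held in memory; a file path works the same way
csv_text = """Month,Sales,Transactions
2023-01-31,12000,80
2023-02-28,15500,95
"""
df_csv = pd.read_csv(io.StringIO(csv_text), parse_dates=["Month"])

# The same records as JSON (one object per row), also wrapped in StringIO
json_text = (
    '[{"Month": "2023-01-31", "Sales": 12000, "Transactions": 80},'
    ' {"Month": "2023-02-28", "Sales": 15500, "Transactions": 95}]'
)
df_json = pd.read_json(io.StringIO(json_text))

print(df_csv.dtypes)
print(df_json)
```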

Pandas is built on top of NumPy, so much of NumPy's functionality, such as vectorized operations and mathematical functions, still applies to Pandas objects.
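In practice, this means you can apply NumPy functions directly to Pandas objects and pull the underlying values out as a NumPy array. Here is a minimal sketch with a made-up Series:

```python
import numpy as np
import pandas as pd

s = pd.Series([1.0, 10.0, 100.0], name="Sales")

# A NumPy universal function applied to a Series returns a Series (index preserved)
logged = np.log10(s)

# The underlying values can be extracted as a plain NumPy array
arr = s.to_numpy()

print(type(logged).__name__, logged.tolist())
print(type(arr).__name__, arr)
```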

Let's try the library. Like NumPy, it's usually available by default if you use a data science platform such as Anaconda. If you are unsure, you can follow the Pandas Installation guide.

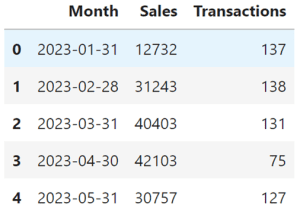

The following code builds a dataset from NumPy objects, turns it into a DataFrame (a table-like object), and shows the top five rows of data.

import numpy as np
import pandas as pd

np.random.seed(0)
# 'M' is the month-end frequency; pandas 2.2+ prefers the alias 'ME'
months = pd.date_range(start="2023-01-01", periods=12, freq='M')
sales = np.random.randint(10000, 50000, size=12)
transactions = np.random.randint(50, 200, size=12)

data = {
    'Month': months,
    'Sales': sales,
    'Transactions': transactions
}

df = pd.DataFrame(data)
df.head()

Then you can try several data manipulation activities, such as data selection.

df[df['Transactions'] <100]
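You can also combine conditions. The sketch below uses a small hand-made DataFrame with the same column names. The values are invented, not the random ones above. It shows three techniques: `&` with parenthesized conditions, `.loc` for choosing rows and columns at once, and `query()` for the same filter written as a string.

```python
import pandas as pd

# Small hand-made stand-in for the article's DataFrame (values are made up)
df = pd.DataFrame({
    'Month': pd.date_range(start="2023-01-01", periods=4, freq='MS'),
    'Sales': [12000, 45000, 30000, 18000],
    'Transactions': [80, 150, 95, 120],
})

# Combine conditions with & (and) or | (or); wrap each condition in parentheses
busy_high_sales = df[(df['Transactions'] > 90) & (df['Sales'] > 20000)]

# .loc filters rows by a condition and picks columns by label in one step
low_traffic_sales = df.loc[df['Transactions'] < 100, ['Month', 'Sales']]

# query() expresses the first filter as a string expression
same_as_above = df.query('Transactions > 90 and Sales > 20000')

print(busy_high_sales)
print(low_traffic_sales)
```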

It's also possible to perform data calculations.

total_sales = df['Sales'].sum()
average_transactions = df['Transactions'].mean()
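Beyond single totals, you can derive new columns and compute several statistics at once with `agg`. Here is a sketch on invented values with the article's column names. `SalesPerTransaction` is a hypothetical column added for illustration.

```python
import pandas as pd

# Made-up values standing in for the article's randomly generated data
df = pd.DataFrame({
    'Sales': [12000, 45000, 30000, 18000],
    'Transactions': [80, 150, 95, 120],
})

total_sales = df['Sales'].sum()
average_transactions = df['Transactions'].mean()

# Derived column: average sales value per transaction in each row
df['SalesPerTransaction'] = df['Sales'] / df['Transactions']

# Several statistics for several columns in one call (NaN where not requested)
summary = df.agg({'Sales': ['sum', 'max'], 'Transactions': ['mean', 'min']})

print(total_sales, average_transactions)
print(summary)
```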

Performing data cleaning with Pandas is also easy.

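# Drop the rows that contain missing values...
df_dropped = df.dropna()
# ...or, alternatively, fill missing numeric values with each column's mean
df_filled = df.fillna(df.mean(numeric_only=True))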
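`dropna` and `fillna` are usually alternatives rather than consecutive steps, and the generated DataFrame above has no missing values to begin with. The sketch below uses hypothetical data with gaps to show the difference. Passing `numeric_only=True` restricts `mean()` to numeric columns.

```python
import numpy as np
import pandas as pd

# Hypothetical data with gaps (np.nan marks a missing value)
df = pd.DataFrame({
    'Sales': [12000, np.nan, 30000, 18000],
    'Transactions': [80, 150, np.nan, 120],
})

# Count missing values per column
print(df.isna().sum())

# Option 1: drop every row that contains at least one missing value
dropped = df.dropna()

# Option 2: keep all rows and replace each gap with its column mean
filled = df.fillna(df.mean(numeric_only=True))

print(dropped)
print(filled)
```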

There's much more you can do with Pandas for data manipulation. Check out Bala Priya's article on using Pandas for Data Manipulation to learn more.

3. Polars

Polars is a relatively new Python library for data manipulation, designed for the swift analysis of large datasets. Polars boasts 30x performance gains compared to Pandas in several benchmark tests.

Polars is built on the Apache Arrow columnar memory format, so it manages memory efficiently for large datasets and supports parallel processing. It also optimizes data manipulation performance through lazy execution, which delays computation until it is necessary.

To install Polars, you can use the following command.

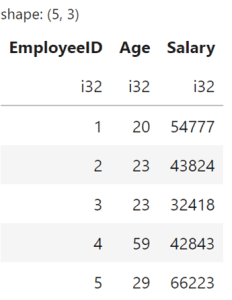

As with Pandas, you can create a Polars DataFrame with the following code.

import numpy as np
import polars as pl

np.random.seed(0)
employee_ids = np.arange(1, 101)
ages = np.random.randint(20, 60, size=100)
salaries = np.random.randint(30000, 100000, size=100)

df = pl.DataFrame({
    'EmployeeID': employee_ids,
    'Age': ages,
    'Salary': salaries
})

df.head()

However, Polars manipulates data differently. For example, here is how we select data with Polars.

df.filter(pl.col('Age') > 40)

The API is considerably more complex than Pandas, but it pays off when you need fast execution on large datasets. You won't see that benefit if your data is small.

For the details, you can refer to Josep Ferrer's article on how Polars differs from Pandas.

4. Vaex

Vaex is similar to Polars in that it was developed specifically for manipulating large datasets. However, the two process data differently. For example, Vaex uses memory-mapping techniques, while Polars focuses on a multi-threaded approach.

Vaex is best suited to datasets that are much larger than what Polars is intended for. Polars also handles large datasets, but it works best when the data still fits into memory. Vaex, on the other hand, works well on datasets that exceed memory.
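Vaex's own API is not shown here. Memory-mapping means the data stays in a file and is read in pieces on demand. As a rough, NumPy-only illustration of the idea, here is a sketch using `np.memmap`. This is not how Vaex is implemented internally, and the file name and sizes are arbitrary.

```python
import os
import tempfile

import numpy as np

# A temporary file stands in for a dataset stored on disk
path = os.path.join(tempfile.mkdtemp(), 'values.dat')
n = 1_000_000

# Create a writable memory-mapped file and fill it with sample values
on_disk = np.memmap(path, dtype=np.float64, mode='w+', shape=(n,))
on_disk[:] = np.arange(n, dtype=np.float64)
on_disk.flush()  # make sure the values are written to the file
del on_disk      # release the writable mapping

# Reopen read-only; pages are loaded from disk only when they are accessed
values = np.memmap(path, dtype=np.float64, mode='r', shape=(n,))

# Process the file in chunks so only one slice is touched at a time
chunk_size = 100_000
total = 0.0
for start in range(0, n, chunk_size):
    total += float(values[start:start + chunk_size].sum())

mean = total / n
print(mean)
```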

To install Vaex, it's better to follow its documentation, as an incorrect installation could break your system.

5. CuPy

CuPy is an open-source library that enables GPU-accelerated computing in Python. It was designed as a drop-in replacement for NumPy and SciPy when you need to run calculations on the NVIDIA CUDA or AMD ROCm platforms.

This makes CuPy great for applications that require intense numerical computation and need GPU acceleration. CuPy can exploit the parallel architecture of the GPU, which benefits large-scale computations.

To install CuPy, refer to its GitHub repository, because the many available package variants might or might not suit your platform. For example, the following is for the CUDA platform.

CuPy's API is similar to NumPy's, so you can start using it right away if you already know NumPy. For example, here is a simple CuPy calculation.

import cupy as cp

x = cp.arange(10)
y = cp.array([2] * 10)
z = x * y
# Copy the result from GPU memory back into a NumPy array for printing
print(cp.asnumpy(z))
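Because CuPy mirrors the NumPy API, one common pattern is to write array code against a module alias and fall back to NumPy when no GPU is available. Here is a minimal sketch. The alias name `xp` is a convention, not a requirement.

```python
import numpy as np

try:
    import cupy as xp
    xp.zeros(1)  # raises here if CuPy is installed but no usable GPU is present
except Exception:
    xp = np  # fall back to NumPy, which shares most of the same array API

x = xp.arange(10)
y = xp.array([2] * 10)
z = x * y

# cupy.asnumpy copies a GPU array to host memory; a NumPy array needs no copy
result = xp.asnumpy(z) if xp is not np else z
print(result)
```

The same arithmetic lines run unchanged on either backend. Only the final conversion to a host array depends on which module was picked.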

CuPy is an essential Python library if you regularly work with large-scale computational data.

Conclusion

All the Python libraries we have explored are essential for certain use cases. NumPy and Pandas might be the basics, but libraries like Polars, Vaex, and CuPy can be helpful in specific environments.

If you have any other libraries you consider essential, please share them in the comments!

Cornellius Yudha Wijaya is a data science assistant manager and data writer. While working full-time at Allianz Indonesia, he loves to share Python and data tips via social media and writing media. Cornellius writes on a variety of AI and machine learning topics.