New analysis from China has proposed a way for enhancing the standard of pictures generated by Latent Diffusion Fashions (LDMs) fashions corresponding to Steady Diffusion.

The tactic focuses on optimizing the salient areas of a picture – areas most certainly to draw human consideration.

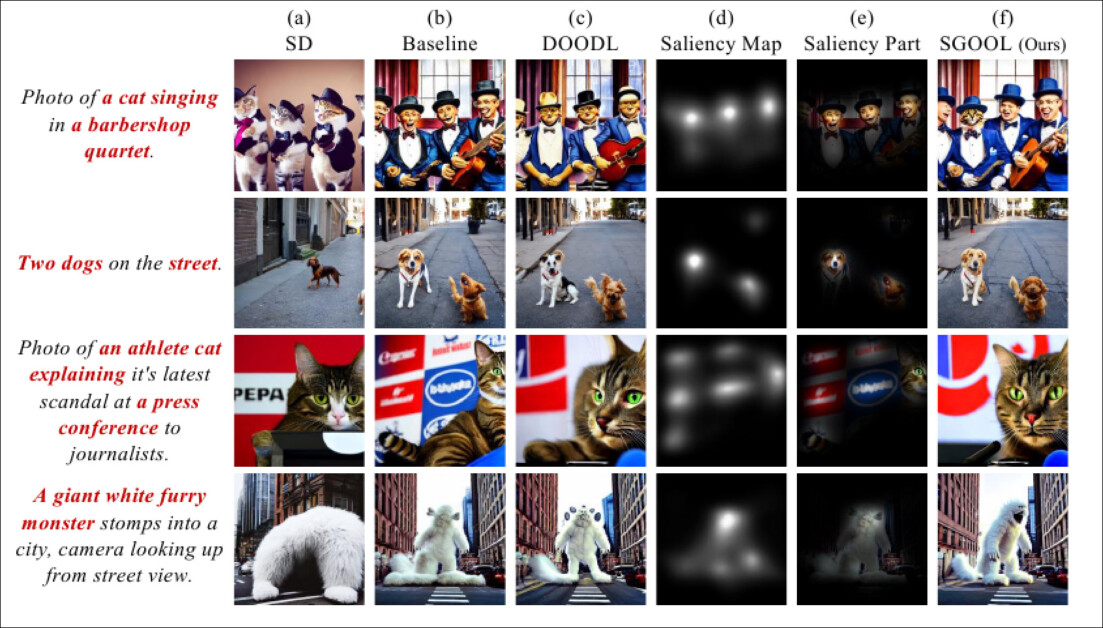

The brand new analysis has discovered that saliency maps (fourth column from left) can be utilized as a filter, or ‘masks’, for steering the locus of consideration in denoising processes in the direction of areas of the picture that people are most certainly to concentrate to. Supply: https://arxiv.org/pdf/2410.10257

Conventional strategies, optimize the complete picture uniformly, whereas the brand new method leverages a saliency detector to establish and prioritize extra ‘necessary’ areas, as people do.

In quantitative and qualitative checks, the researchers’ technique was in a position to outperform prior diffusion-based fashions, each when it comes to picture high quality and constancy to textual content prompts.

The brand new method additionally scored finest in a human notion trial with 100 individuals.

Pure Choice

Saliency, the flexibility to prioritize info in the actual world and in pictures, is an important half of human imaginative and prescient.

A easy instance of that is the elevated consideration to element that classical artwork assigns to necessary areas of a portray, such because the face, in a portrait, or the masts of a ship, in a sea-based topic; in such examples, the artist’s consideration converges on the central material, that means that broad particulars corresponding to a portrait background or the distant waves of a storm are sketchier and extra broadly consultant than detailed.

Knowledgeable by human research, machine studying strategies have arisen over the past decade that may replicate or a minimum of approximate this human locus of curiosity in any image.

Object segmentation (semantic segmentation) might be an aide in individuating sides of a picture, and creating corresponding saliency maps. Supply: https://arxiv.org/pdf/1312.6034

Within the run of analysis literature, the preferred saliency map detector over the past 5 years has been the 2016 Gradient-weighted Class Activation Mapping (Grad-CAM) initiative, which later developed into the improved Grad-CAM++ system, amongst different variants and refinements.

Grad-CAM makes use of the gradient activation of a semantic token (corresponding to ‘canine’ or ‘cat’) to provide a visible map of the place the idea or annotation appears more likely to be represented within the picture.

Examples from the unique Grad-CAM paper. Within the second column, guided backpropagation individuates all contributing options. Within the third column, the semantic maps are drawn for the 2 ideas ‘canine’ and ‘cat’. The fourth column represents the concatenation of the earlier two inferences. The fifth, the occlusion (masking) map that corresponds to the inference; and at last, within the sixth column, Grad-CAM visualizes a ResNet-18 layer. Supply: https://arxiv.org/pdf/1610.02391

Human surveys on the outcomes obtained by these strategies have revealed a correspondence between these mathematical individuations of key curiosity factors in a picture, and human consideration (when scanning the picture).

SGOOL

The new paper considers what saliency can convey to text-to-image (and, doubtlessly, text-to-video) methods corresponding to Steady Diffusion and Flux.

When decoding a consumer’s text-prompt, Latent Diffusion Fashions discover their skilled latent house for discovered visible ideas that correspond with the phrases or phrases used. They then parse these discovered data-points by way of a denoising course of, the place random noise is step by step developed right into a inventive interpretation of the consumer’s text-prompt.

At this level, nevertheless, the mannequin provides equal consideration to each single a part of the picture. Because the popularization of diffusion fashions in 2022, with the launch of OpenAI’s obtainable Dall-E picture turbines, and the following open-sourcing of Stability.ai’s Steady Diffusion framework, customers have discovered that ‘important’ sections of a picture are sometimes under-served.

Contemplating that in a typical depiction of a human, the individual’s face (which is of most significance to the viewer) is more likely to occupy not more than 10-35% of the entire picture, this democratic technique of consideration dispersal works in opposition to each the character of human notion and the historical past of artwork and pictures.

When the buttons on an individual’s denims obtain the identical computing heft as their eyes, the allocation of sources may very well be mentioned to be non-optimal.

Subsequently, the brand new technique proposed by the authors, titled Saliency Guided Optimization of Diffusion Latents (SGOOL), makes use of a saliency mapper to extend consideration on uncared for areas of an image, devoting fewer sources to sections more likely to stay on the periphery of the viewer’s consideration.

Technique

The SGOOL pipeline consists of picture era, saliency mapping, and optimization, with the general picture and saliency-refined picture collectively processed.

Conceptual schema for SGOOL.

The diffusion mannequin’s latent embeddings are optimized straight with fine-tuning, eradicating the necessity to practice a selected mannequin. Stanford College’s Denoising Diffusion Implicit Mannequin (DDIM) sampling technique, acquainted to customers of Steady Diffusion, is customized to include the secondary info supplied by saliency maps.

The paper states:

‘We first make use of a saliency detector to imitate the human visible consideration system and mark out the salient areas. To keep away from retraining an extra mannequin, our technique straight optimizes the diffusion latents.

‘In addition to, SGOOL makes use of an invertible diffusion course of and endows it with the deserves of fixed reminiscence implementation. Therefore, our technique turns into a parameter-efficient and plug-and-play fine-tuning technique. Intensive experiments have been achieved with a number of metrics and human analysis.’

Since this technique requires a number of iterations of the denoising course of, the authors adopted the Direct Optimization Of Diffusion Latents (DOODL) framework, which gives an invertible diffusion course of – although it nonetheless applies consideration to the whole thing of the picture.

To outline areas of human curiosity, the researchers employed the College of Dundee’s 2022 TransalNet framework.

Examples of saliency detection from the 2022 TransalNet venture. Supply: https://discovery.dundee.ac.uk/ws/portalfiles/portal/89737376/1_s2.0_S0925231222004714_main.pdf

The salient areas processed by TransalNet have been then cropped to generate conclusive saliency sections more likely to be of most curiosity to precise folks.

The distinction between the consumer textual content and the picture must be thought-about, when it comes to defining a loss perform that may decide if the method is working. For this, a model of OpenAI’s Contrastive Language–Picture Pre-training (CLIP) – by now a mainstay of the picture synthesis analysis sector – was used, along with consideration of the estimated semantic distance between the textual content immediate and the worldwide (non-saliency) picture output.

The authors assert:

‘[The] last loss [function] regards the relationships between saliency components and the worldwide picture concurrently, which helps to steadiness native particulars and world consistency within the era course of.

‘This saliency-aware loss is leveraged to optimize picture latent. The gradients are computed on the noised [latent] and leveraged to boost the conditioning impact of the enter immediate on each salient and world points of the unique generated picture.’

Knowledge and Checks

To check SGOOL, the authors used a ‘vanilla’ distribution of Steady Diffusion V1.4 (denoted as ‘SD’ in take a look at outcomes) and Steady Diffusion with CLIP steerage (denoted as ‘baseline’ in outcomes).

The system was evaluated in opposition to three public datasets: CommonSyntacticProcesses (CSP), DrawBench, and DailyDallE*.

The latter incorporates 99 elaborate prompts from an artist featured in one among OpenAI’s weblog posts, whereas DrawBench gives 200 prompts throughout 11 classes. CSP consists of 52 prompts primarily based on eight various grammatical instances.

For SD, baseline and SGOOL, within the checks, the CLIP mannequin was used over ViT/B-32 to generate the picture and textual content embeddings. The identical immediate and random seed was used. The output dimension was 256×256, and the default weights and settings of TransalNet have been employed.

In addition to the CLIP rating metric, an estimated Human Choice Rating (HPS) was used, along with a real-world examine with 100 individuals.

Quantitative outcomes evaluating SGOOL to prior configurations.

In regard to the quantitative outcomes depicted within the desk above, the paper states:

‘[Our] mannequin considerably outperforms SD and Baseline on all datasets underneath each CLIP rating and HPS metrics. The common outcomes of our mannequin on CLIP rating and HPS are 3.05 and 0.0029 increased than the second place, respectively.’

The authors additional estimated the field plots of the HPS and CLIP scores in respect to the earlier approaches:

Field plots for the HPS and CLIP scores obtained within the checks.

They remark:

‘It may be seen that our mannequin outperforms the opposite fashions, indicating that our mannequin is extra able to producing pictures which are in keeping with the prompts.

‘Nevertheless, within the field plot, it isn’t straightforward to visualise the comparability from the field plot as a result of dimension of this analysis metric at [0, 1]. Subsequently, we proceed to plot the corresponding bar plots.

‘It may be seen that SGOOL outperforms SD and Baseline on all datasets underneath each CLIP rating and HPS metrics. The quantitative outcomes show that our mannequin can generate extra semantically constant and human-preferred pictures.’

The researchers word that whereas the baseline mannequin is ready to enhance the standard of picture output, it doesn’t contemplate the salient areas of the picture. They contend that SGOOL, in arriving at a compromise between world and salient picture analysis, obtains higher pictures.

In qualitative (automated) comparisons, the variety of optimizations was set to 50 for SGOOL and DOODL.

Qualitative outcomes for the checks. Please consult with the supply paper for higher definition.

Right here the authors observe:

‘Within the [first row], the themes of the immediate are “a cat singing” and “a barbershop quartet”. There are 4 cats within the picture generated by SD, and the content material of the picture is poorly aligned with the immediate.

‘The cat is ignored within the picture generated by Baseline, and there’s a lack of element within the portrayal of the face and the main points within the picture. DOODL makes an attempt to generate a picture that’s in keeping with the immediate.

‘Nevertheless, since DOODL optimizes the worldwide picture straight, the individuals within the picture are optimized towards the cat.’

They additional word that SGOOL, against this, generates pictures which are extra in keeping with the unique immediate.

Within the human notion take a look at, 100 volunteers evaluated take a look at pictures for high quality and semantic consistency (i.e., how intently they adhered to their supply text-prompts). The individuals had limitless time to make their decisions.

Outcomes for the human notion take a look at.

Because the paper factors out, the authors’ technique is notably most popular over the prior approaches.

Conclusion

Not lengthy after the shortcomings addressed on this paper grew to become evident in native installations of Steady Diffusion, varied bespoke strategies (corresponding to After Detailer) emerged to pressure the system to use further consideration to areas that have been of larger human curiosity.

Nevertheless, this sort of method requires that the diffusion system initially undergo its regular technique of making use of equal consideration to each a part of the picture, with the elevated work being achieved as an additional stage.

The proof from SGOOL means that making use of primary human psychology to the prioritization of picture sections may tremendously improve the preliminary inference, with out post-processing steps.

* The paper gives the identical hyperlink for this as for CommonSyntacticProcesses.

First revealed Wednesday, October 16, 2024