Introduction

The retriever is an important part of the RAG (Retrieval Augmented Generation) pipeline. In this article, you’ll implement a custom retriever that combines Keyword and Vector search retrievers using LlamaIndex. Chat with Multiple Documents using Gemini LLM is the project use case on which we will build this RAG pipeline. Before starting the project, we will first understand a few essential components needed to build such an application, such as Settings (which replaces the deprecated Service Context) and the Storage Context.

Learning Objectives

- Gain insights into the RAG pipeline, understanding the roles of the Retriever and Generator components in generating contextual responses.

- Learn to integrate Keyword and Vector Search methods to develop a custom retriever, enhancing search accuracy in RAG applications.

- Acquire proficiency in using LlamaIndex for data ingestion, providing context to LLMs, and deepening the connection to custom data.

- Understand the significance of custom retrievers in mitigating hallucinations in LLM responses through hybrid search mechanisms.

- Explore advanced retriever implementations such as reranking and HyDE to enhance document relevance in RAG.

- Learn to integrate Gemini LLM and embeddings within LlamaIndex for response generation and data storage, improving RAG capabilities.

- Develop decision-making skills for custom retriever configuration, including selecting between AND and OR operations to optimize search results.

This article was published as a part of the Data Science Blogathon.

What’s LlamaIndex?

The field of Large Language Models is expanding rapidly, improving significantly every day. With an increasing number of models being released at a fast pace, there’s a growing need to integrate these models with custom data. This integration gives businesses, enterprises, and end-users more flexibility and a deeper connection to their data.

LlamaIndex, initially known as GPT Index, is a data framework designed for your LLM applications. As building custom data-driven chatbots like ChatGPT continues to grow in popularity, frameworks like LlamaIndex become increasingly valuable. At its core, LlamaIndex provides various data connectors to facilitate data ingestion. In this article, we will explore how to pass our data as context to the LLM; this idea is what we mean by Retrieval Augmented Generation, or RAG for short.

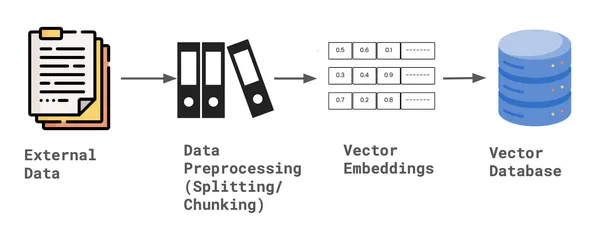

What’s RAG?

In Retrieval Augmented Generation, or RAG for short, there are two major components: the Retriever and the Generator.

- The Retriever can be a vector database; its job is to retrieve the documents relevant to the user query and pass them as context to the prompt.

- The Generator is a Large Language Model; its job is to take the retrieved documents along with the prompt and generate a meaningful response from that context.

In this way, RAG can be viewed as in-context learning through automated few-shot prompting: instead of hand-writing context into the prompt, the retriever supplies relevant context for each query.
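To make the two roles concrete, here is a minimal, library-free sketch of the retrieve-then-generate loop. It assumes a tiny in-memory corpus, uses word overlap as a stand-in retriever (not what LlamaIndex does), and replaces the LLM with a hypothetical `generate` stub; `retrieve`, `build_prompt`, and `generate` are illustrative names, not LlamaIndex APIs.

```python
# Minimal retrieve-then-generate loop (illustrative only, not LlamaIndex code).
DOCUMENTS = [
    "LlamaIndex is a data framework for LLM applications.",
    "Gemini is a family of large language models from Google.",
    "Keyword search ranks documents by term occurrence.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase words with surrounding punctuation stripped."""
    return {w.strip(".,?!").lower() for w in text.split()}


def retrieve(query: str, top_k: int = 1) -> list[str]:
    """Retriever: return the top_k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(DOCUMENTS, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list[str]) -> str:
    """Insert the retrieved chunks into the prompt as context."""
    context_block = "\n".join(f"- {chunk}" for chunk in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {query}\n"
        "Answer:"
    )


def generate(prompt: str) -> str:
    """Generator stub (hypothetical): a real pipeline would call an LLM here."""
    return f"<LLM answer based on a {len(prompt)}-character prompt>"


question = "What is LlamaIndex?"
prompt = build_prompt(question, retrieve(question))
print(prompt)
print(generate(prompt))
```

The design point is that the prompt is assembled per query from whatever the retriever returns, so the quality of the answer depends directly on the quality of retrieval.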

Importance of the Retriever

Let’s understand the importance of the Retriever component in the RAG pipeline.

To develop a custom retriever, it’s crucial to determine the type of retriever that best suits our needs. For our purposes, we will implement a Hybrid Search that integrates both Keyword Search and Vector Search.

Vector Search identifies documents relevant to a user’s query based on similarity, that is, semantic search, whereas Keyword Search finds documents based on the frequency of term occurrence. This integration can be achieved in two ways using LlamaIndex. When building the custom retriever for Hybrid Search, a crucial decision is choosing between an AND or an OR operation:

- AND operation: This approach keeps only the documents returned by both retrievers, making it more restrictive but ensuring high relevance. You can think of it as the intersection of the Keyword Search and Vector Search results.

- OR operation: This approach keeps the documents returned by either retriever, increasing the breadth of results but potentially reducing relevance. You can think of it as the union of the Keyword Search and Vector Search results.
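The sketch below illustrates both search styles and the two merge modes on a toy corpus. It is a simplified stand-in built on assumptions: keyword search is plain term-frequency counting, the three-dimensional vectors are hand-made placeholders for real embeddings, and all function names are hypothetical. In LlamaIndex, the IDs would be the node IDs returned by each retriever.

```python
import math
from collections import Counter

# Toy corpus; the ids play the role of LlamaIndex node IDs (hypothetical data).
DOCS = {
    "n1": "gemini is a large language model from google",
    "n2": "llamaindex is a data framework for llm applications",
    "n3": "keyword search counts term occurrences in documents",
}

# Hand-made 3-d vectors standing in for real embeddings (assumption).
EMBEDDINGS = {
    "n1": [0.9, 0.1, 0.0],
    "n2": [0.2, 0.9, 0.1],
    "n3": [0.0, 0.2, 0.9],
}


def keyword_search(query: str, top_k: int = 2) -> list[str]:
    """Rank documents by how often the query terms occur in them."""
    terms = query.lower().split()
    scores = {}
    for node_id, text in DOCS.items():
        counts = Counter(text.split())
        score = sum(counts[t] for t in terms)
        if score > 0:  # only documents containing at least one term are returned
            scores[node_id] = score
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def vector_search(query_vec: list[float], top_k: int = 2) -> list[str]:
    """Rank documents by cosine similarity to the query vector."""
    ranked = sorted(EMBEDDINGS, key=lambda i: cosine(query_vec, EMBEDDINGS[i]), reverse=True)
    return ranked[:top_k]


def hybrid(keyword_ids: list[str], vector_ids: list[str], mode: str = "AND") -> set[str]:
    """AND keeps IDs returned by both retrievers (intersection); OR keeps IDs from either (union)."""
    if mode == "AND":
        return set(keyword_ids) & set(vector_ids)
    if mode == "OR":
        return set(keyword_ids) | set(vector_ids)
    raise ValueError("mode must be 'AND' or 'OR'")


kw_ids = keyword_search("llamaindex data framework")
vec_ids = vector_search([0.1, 0.8, 0.3])  # pretend embedding of the same query
print(kw_ids, vec_ids)                         # ['n2'] ['n2', 'n3']
print(sorted(hybrid(kw_ids, vec_ids, "AND")))  # ['n2']
print(sorted(hybrid(kw_ids, vec_ids, "OR")))   # ['n2', 'n3']
```

Here AND keeps only `n2`, the node both retrievers agree on, while OR also admits `n3`, which only the vector search returned.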

Building a Custom Retriever using LlamaIndex

Let us now build a custom retriever using LlamaIndex. To build it, we need to follow a few steps.

Step1: Installation

To get started with the code implementation on Google Colab or Jupyter Notebook, you need to install the required libraries. In our case, we will use LlamaIndex to build the custom retriever, Gemini for the embedding model and LLM inference, and PyPDF for the data connector.

!pip install llama-index
!pip install llama-index-llms-gemini
!pip install llama-index-embeddings-gemini

Step2: Set up the Google API key

In this project, we will use Google Gemini both as the Large Language Model that generates responses and as the embedding model that converts data into vectors for storage in a vector DB or in-memory storage using LlamaIndex.

from getpass import getpass

GOOGLE_API_KEY = getpass("Enter your Google API key:")

Step3: Load Data and Create Document Nodes

In LlamaIndex, data loading is done with SimpleDirectoryReader. First, create a folder named data and add files in any supported format to it. In our example, I will add a PDF file to the data folder. Once the documents are loaded, they are parsed into nodes, which split each document into smaller segments. A node is a data schema defined within the LlamaIndex framework.

The latest version of LlamaIndex has updated its code structure: the node parser, embedding model, and LLM are now defined in the global Settings object.
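Chunking is easiest to see in isolation. The following is a simplified sketch that assumes word-based windows; LlamaIndex’s default node parser (a sentence splitter) works differently, so this is not its algorithm, and `split_into_nodes` and the dict layout are hypothetical. It shows the two parameters that usually matter: the chunk size, and an overlap that keeps text near a boundary in two neighbouring chunks.

```python
def split_into_nodes(text: str, chunk_size: int = 8, chunk_overlap: int = 2) -> list[dict]:
    """Split text into overlapping word windows, each wrapped in a node-like dict."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - chunk_overlap  # how far each new window moves forward
    nodes = []
    for start in range(0, len(words), step):
        window = words[start:start + chunk_size]
        nodes.append({"node_id": f"node-{len(nodes)}", "text": " ".join(window)})
        if start + chunk_size >= len(words):
            break  # this window already reaches the end of the text
    return nodes


# Usage: a 20-word dummy document
sample_text = " ".join(f"w{i}" for i in range(20))
for node in split_into_nodes(sample_text):
    print(node["node_id"], node["text"])
```

With `chunk_size=8` and `chunk_overlap=2`, a 20-word text yields three nodes, and each node repeats the last two words of the previous one.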

from llama_index.core import SimpleDirectoryReader
from llama_index.core import Settings

documents = SimpleDirectoryReader('data').load_data()
nodes = Settings.node_parser.get_nodes_from_documents(documents)

Step4: Set up the Embedding Model and Large Language Model

Gemini supports various models, including gemini-pro, gemini-1.0-pro, gemini-1.5, and the vision model, among others. In this case, we will use the default model and provide the Google API key. For the embedding model, we are currently using Gemini’s embedding-001. Make sure a valid API key is provided.

from llama_index.embeddings.gemini import GeminiEmbedding
from llama_index.llms.gemini import Gemini

Settings.embed_model = GeminiEmbedding(
    model_name="models/embedding-001", api_key=GOOGLE_API_KEY
)
Settings.llm = Gemini(api_key=GOOGLE_API_KEY)

Step5: Define the Storage Context and Store Data

Once the data is parsed into nodes, LlamaIndex provides a Storage Context. Its default document store (docstore) holds the nodes themselves, while the vector embeddings computed later by the vector index go into the storage context’s vector store. By default, everything is kept in memory, so the stored nodes can be indexed later.

from llama_index.core import StorageContext

storage_context = StorageContext.from_defaults()
storage_context.docstore.add_documents(nodes)

Create Indexes: Keyword and Vector

To build the custom retriever that performs hybrid search, we need to create two indexes: first, a Vector Index that performs vector search, and second, a Keyword Index that performs keyword search. To create the indexes, we need the storage context and the nodes, along with the default Settings for the embedding model and LLM.
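It can help to see what a keyword index stores before building one. The sketch below is a rough, hypothetical model of a keyword table: an inverted index from keywords to node IDs, with a tiny hand-written stopword list and a regex tokenizer (both assumptions; LlamaIndex’s own keyword extraction differs in detail). A vector index, by contrast, keeps one embedding per node.

```python
import re

# A tiny stopword list; a real keyword extractor would use a much larger one (assumption).
STOPWORDS = {"a", "an", "the", "is", "of", "for", "and", "in", "to", "what", "does"}


def extract_keywords(text: str) -> set[str]:
    """Lowercase alphanumeric tokens that are not stopwords."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def build_keyword_table(nodes: dict[str, str]) -> dict[str, set[str]]:
    """Map each keyword to the IDs of the nodes that contain it (an inverted index)."""
    table = {}
    for node_id, text in nodes.items():
        for kw in extract_keywords(text):
            table.setdefault(kw, set()).add(node_id)
    return table


def keyword_lookup(table: dict[str, set[str]], query: str) -> set[str]:
    """Return IDs of nodes sharing at least one keyword with the query."""
    hits = set()
    for kw in extract_keywords(query):
        hits |= table.get(kw, set())
    return hits


sample_nodes = {
    "node-0": "Gemini is a family of models from Google.",
    "node-1": "LlamaIndex is a data framework for LLM applications.",
}
table = build_keyword_table(sample_nodes)
print(keyword_lookup(table, "What is the LlamaIndex framework?"))  # {'node-1'}
```

Because both indexes are built from the same `nodes`, the node IDs they return line up, which is what lets the custom retriever intersect or union their results.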

from llama_index.core import SimpleKeywordTableIndex, VectorStoreIndex

vector_index = VectorStoreIndex(nodes, storage_context=storage_context)
keyword_index = SimpleKeywordTableIndex(nodes, storage_context=storage_context)

Step6: Construct the Custom Retriever

To construct a custom retriever for hybrid search using LlamaIndex, we first import the schema types it works with: QueryBundle, which wraps the user query, and NodeWithScore, which pairs a retrieved node with its relevance score. The retriever needs both a Vector Index Retriever and a Keyword Retriever. This allows us to perform hybrid searches, combining both methods to give the LLM more relevant context and reduce hallucinations. Additionally, we must specify the mode, either AND or OR, depending on how we want to combine the results.

At query time, the query bundle is passed to both the vector and keyword retrievers, and the node IDs returned by each are collected. Based on the chosen mode, the retriever then takes the intersection (AND) or union (OR) of those IDs and returns the matching nodes.

from typing import List

from llama_index.core import QueryBundle
from llama_index.core.schema import NodeWithScore
from llama_index.core.retrievers import (
    BaseRetriever,
    VectorIndexRetriever,
    KeywordTableSimpleRetriever,
)


class CustomRetriever(BaseRetriever):
    """Hybrid retriever that merges vector and keyword search results."""

    def __init__(
        self,
        vector_retriever: VectorIndexRetriever,
        keyword_retriever: KeywordTableSimpleRetriever,
        mode: str = "AND",
    ) -> None:
        self._vector_retriever = vector_retriever
        self._keyword_retriever = keyword_retriever
        # "AND" = intersection of both result sets, "OR" = union
        if mode not in ("AND", "OR"):
            raise ValueError("Invalid mode.")
        self._mode = mode
        super().__init__()

    def _retrieve(self, query_bundle: QueryBundle) -> List[NodeWithScore]:
        # Run the same query through both retrievers
        vector_nodes = self._vector_retriever.retrieve(query_bundle)
        keyword_nodes = self._keyword_retriever.retrieve(query_bundle)

        vector_ids = {n.node.node_id for n in vector_nodes}
        keyword_ids = {n.node.node_id for n in keyword_nodes}

        # Map node_id -> NodeWithScore; keyword results overwrite vector results on duplicates
        combined_dict = {n.node.node_id: n for n in vector_nodes}
        combined_dict.update({n.node.node_id: n for n in keyword_nodes})

        if self._mode == "AND":
            retrieve_ids = vector_ids.intersection(keyword_ids)
        else:
            retrieve_ids = vector_ids.union(keyword_ids)

        return [combined_dict[r_id] for r_id in retrieve_ids]

Step7: Define Retrievers

Now that the custom retriever class is defined, we need to instantiate the retrievers and build the query engine. A Response Synthesizer generates a response from the LLM based on a user query and a given set of text chunks, and its output is a Response object. The RetrieverQueryEngine ties the pieces together: it takes the custom retriever and the response synthesizer as parameters.

from llama_index.core import get_response_synthesizer
from llama_index.core.query_engine import RetrieverQueryEngine

vector_retriever = VectorIndexRetriever(index=vector_index, similarity_top_k=2)
keyword_retriever = KeywordTableSimpleRetriever(index=keyword_index)

# custom retriever => combine vector and keyword retrievers
# (mode defaults to "AND"; pass mode="OR" for the union of results)
custom_retriever = CustomRetriever(vector_retriever, keyword_retriever)

# define response synthesizer
response_synthesizer = get_response_synthesizer()

custom_query_engine = RetrieverQueryEngine(
    retriever=custom_retriever,
    response_synthesizer=response_synthesizer,
)

Step8: Run the Custom Retriever Query Engine

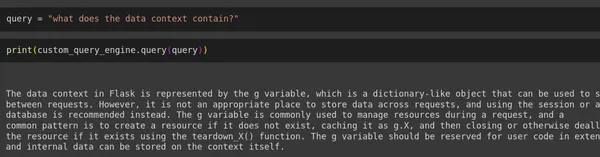

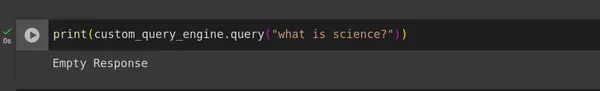

Finally, we have developed a custom retriever aimed at reducing hallucinations. To test its effectiveness, we ran two user queries, one from within the document context and one from outside it, and evaluated the generated responses.

query = "what does the data context contain?"
print(custom_query_engine.query(query))
print(custom_query_engine.query("what is science?"))

Output

Conclusion

We have successfully implemented a custom retriever that performs Hybrid Search by combining Vector and Keyword retrievers using LlamaIndex, with the support of Gemini LLM and Embeddings. This approach can reduce LLM hallucinations to some extent in a typical RAG pipeline.

Key Takeaways

- Development of a custom retriever that integrates both Vector and Keyword retrievers, improving search capabilities and accuracy in identifying relevant documents for RAG.

- Implementing Gemini Embedding and LLM using LlamaIndex Settings, which replaces the Service Context used in earlier versions; Service Context is now deprecated.

- In building the custom retriever, a key decision is whether to use the AND or the OR operation, balancing the intersection and union of Keyword and Vector Search results according to specific needs.

- The custom retriever setup helps reduce hallucinations in Large Language Model responses by using a hybrid search mechanism within the RAG pipeline.

Frequently Asked Questions

A. Hybrid Search is basically a combination of keyword-style search and vector-style search. It has the advantages of keyword search as well as the advantages of the semantic lookup we get from embeddings and vector search.

A. In RAG, the retriever is everything. If the relevant documents are not returned, the Generator model is good for nothing. To reduce hallucinations, the context needs to be accurate. This is where various methods to improve retriever performance come in. A few of them include Reranking, Hybrid Search, Sentence Window retrieval, and HyDE.

A. Yes, Hybrid Search can be used in LangChain. In LangChain, we can define algorithms such as BM25 or TF-IDF as the keyword retrievers and use a vector database as the retriever for vector search. Once both retrievers are set up, they can be combined using the EnsembleRetriever, which enables Hybrid Search in LangChain. This combined retriever can then be fed into the RetrievalQA chain for query processing.

A. Several vector databases support Hybrid Search natively, combining vector search and keyword search internally so that a separate keyword retriever is not needed. Vector databases with built-in Hybrid Search include Qdrant, Weaviate, and Elasticsearch, among others.


The media shown in this article is not owned by Analytics Vidhya and is used at the Author’s discretion.