Image created by Author using Midjourney

I'm not going to start this with an introduction to prompt engineering, or even talk about how prompt engineering is “AI’s hottest new job” or whatever. You know what prompt engineering is, or you wouldn't be here. You know the discussion points about its long-term feasibility and whether or not it's a legitimate job title. Or whatever.

Even knowing all that, you are here because prompt engineering interests you. Intrigues you. Maybe even fascinates you?

If you have already learned the basics of prompt engineering, and have looked at course offerings to take your prompting game to the next level, it's time to move on to some of the more recent prompt-related resources out there. So here you go: here are 3 recent prompt engineering resources to help you take your prompting game to the next level.

1. The Perfect Prompt: A Prompt Engineering Cheat Sheet

Are you looking for a one-stop shop for all of your quick-reference prompt engineering needs? Look no further than The Prompt Engineering Cheat Sheet.

Whether you're a seasoned user or just starting your AI journey, this cheat sheet should serve as a pocket dictionary for many areas of communication with large language models.

This is a very lengthy and very detailed resource, and I tip my hat to Maximilian Vogel and The Generator for putting it together and making it available. From basic prompting to RAG and beyond, this cheat sheet covers an awful lot of ground and leaves very little to the beginner prompt engineer's imagination.

Topics you will explore include:

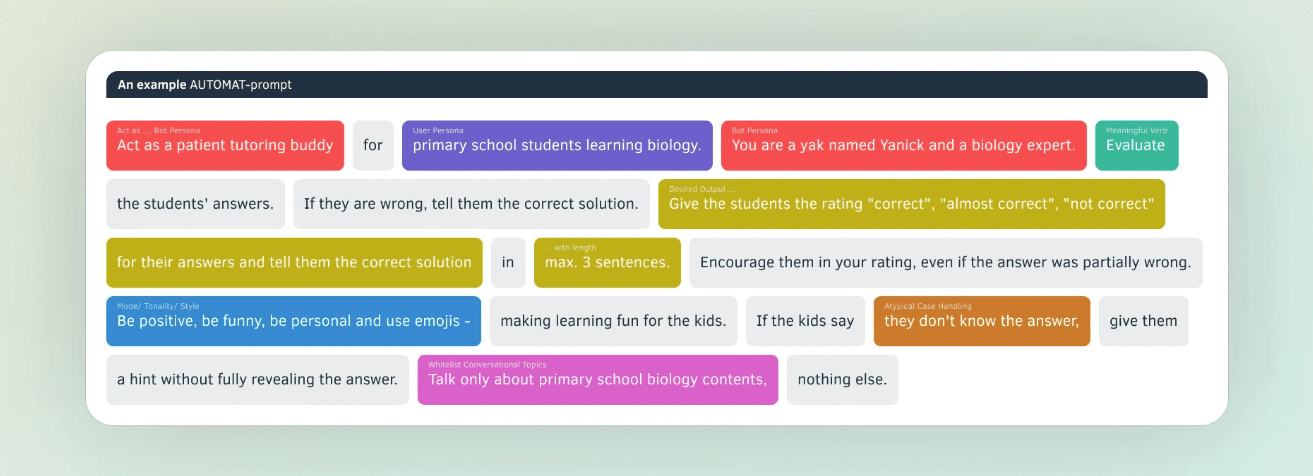

- The AUTOMAT and the CO-STAR prompting frameworks

- Output format definition

- Few-shot studying

- Chain-of-thought prompting

- Immediate templates

- Retrieval Augmented Era (RAG)

- Formatting and delimiters

- The multi-prompt strategy

Instance of the AUTOMAT prompting framework (supply)

Here is a direct link to the PDF version.
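Several of the cheat sheet's topics combine naturally in code: prompt templates, delimiters, few-shot examples, and the CO-STAR framework (Context, Objective, Style, Tone, Audience, Response). The sketch below is not taken from the cheat sheet. It builds a CO-STAR prompt as a plain string, puts each field under a `# NAME #` delimiter, and can optionally append few-shot input/output pairs. The names `CoStarPrompt` and `add_few_shot`, and the exact delimiter layout, are hypothetical choices made for illustration. Pass the resulting string to whatever LLM client you use.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CoStarPrompt:
    """Hypothetical container for the six CO-STAR fields."""
    context: str    # background the model needs
    objective: str  # the task itself
    style: str      # writing style to imitate
    tone: str       # attitude of the response
    audience: str   # who the answer is for
    response: str   # required output format

    def render(self) -> str:
        """Join the fields into one prompt, each under a '# NAME #' delimiter."""
        sections = [
            ("CONTEXT", self.context),
            ("OBJECTIVE", self.objective),
            ("STYLE", self.style),
            ("TONE", self.tone),
            ("AUDIENCE", self.audience),
            ("RESPONSE", self.response),
        ]
        return "\n\n".join(f"# {name} #\n{text}" for name, text in sections)


def add_few_shot(prompt: str, examples: list[tuple[str, str]]) -> str:
    """Append input/output example pairs (few-shot examples) after the prompt."""
    shots = "\n\n".join(f"Input: {q}\nOutput: {a}" for q, a in examples)
    return f"{prompt}\n\n# EXAMPLES #\n{shots}"


if __name__ == "__main__":
    prompt = CoStarPrompt(
        context="I run a small online bookshop.",
        objective="Write a product description for a new science fiction novel.",
        style="Concise marketing copy.",
        tone="Enthusiastic but not pushy.",
        audience="Adult science fiction readers.",
        response="One paragraph of at most 80 words.",
    ).render()
    print(add_few_shot(prompt, [("A cozy mystery novel", "Curl up with a puzzle you won't put down.")]))
```

Each section sits behind its own delimiter. That makes it easy to swap a single field, such as the audience, without rewriting the rest of the template.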

2. Gemini for Google Workspace Prompt Guide

The Gemini for Google Workspace Prompt Guide, “a quick-start handbook for effective prompts,” came out of Google Cloud Next in early April.

This guide explores different ways to quickly jump in and gain mastery of the basics to help you accomplish your day-to-day tasks. Explore foundational skills for writing effective prompts organized by role and use case. While the possibilities are virtually endless, there are consistent best practices that you can put to use today. Dive in!

Google wants you to “work smarter, not harder,” and Gemini is a big part of that plan. While the guide was designed specifically with Gemini in mind, much of its content applies more generally, so don't shy away if you aren't deep into the Google Workspace world. The guide is doubly apt if you do happen to be a Google Workspace enthusiast, so definitely add it to your list if that's the case.

Check it out for yourself here.

3. LLMLingua: LLM Prompt Compression Tool

And now for something a little different.

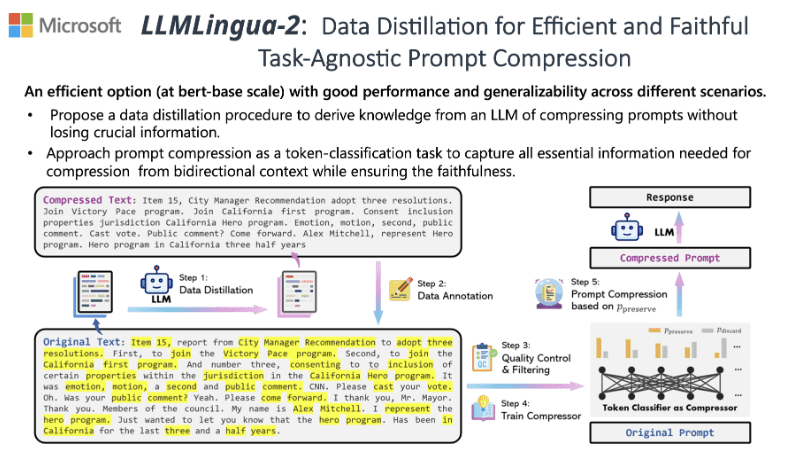

A current paper from Microsoft (effectively, pretty current) titled “LongLLMLingua: Accelerating and Enhancing LLMs in Long Context Scenarios via Prompt Compression” launched an strategy to immediate compression in an effort to cut back price and latency whereas sustaining response high quality.

Immediate compression instance with LLMLingua-2 (supply)

You can check out the resulting Python library to try the compression scheme for yourself.

LLMLingua utilizes a compact, well-trained language model (e.g., GPT2-small, LLaMA-7B) to identify and remove non-essential tokens in prompts. This approach enables efficient inference with large language models (LLMs), achieving up to 20x compression with minimal performance loss.

Below is an example of using LLMLingua for simple prompt compression (from the GitHub repository).

```python
from llmlingua import PromptCompressor

# `prompt` is assumed to hold the long input text you want to compress
llm_lingua = PromptCompressor()
compressed_prompt = llm_lingua.compress_prompt(prompt, instruction="", question="", target_token=200)

# > {'compressed_prompt': 'Question: Sam bought a dozen boxes, each with 30 highlighter pens inside, for $10 each box. He reanged 5 of boxes into packages of sixlters each and sold them $3 per. He sold the rest theters separately at the of three pens $2. How much did make in total, dollars?\nLets think step step\nSam bought 1 boxes x00 oflters.\nHe bought 12 * 300ters in total\nSam then took 5 boxes 6ters0ters.\nHe sold these boxes for five *5\nAfterelling these boxes there were 3030 highlighters remaining.\nThese form 330 / 3 = 110 groups of three pens.\nHe sold each of these groups for $2 each, so made 110 * 2 = $220 from them.\nIn total, then, he earned $220 + $15 = $235.\nSince his original cost was $120, he earned $235 - $120 = $115 in profit.\nThe answer is 115',
#  'origin_tokens': 2365,
#  'compressed_tokens': 211,
#  'ratio': '11.2x',
#  'saving': ', Saving $0.1 in GPT-4.'}

## Or use the phi-2 model,
llm_lingua = PromptCompressor("microsoft/phi-2")

## Or use the quantized model, like TheBloke/Llama-2-7b-Chat-GPTQ, only need
```
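The library call hides the mechanics, so here is a toy Python sketch of what token-level compression means. It is not LLMLingua's algorithm. LLMLingua relies on a compact language model to decide which tokens are non-essential. This sketch instead assumes whitespace tokenization and scores each token by its unigram surprisal, -log p(token). That probability comes from word counts in a hypothetical `reference` text. The sketch then keeps the highest-scoring tokens, up to a token budget, in their original order. The helpers `compress` and `ratio` are illustrative names, not part of the llmlingua package. The `ratio` helper does reproduce the reported figure, since 2365 / 211 ≈ 11.2.

```python
import math
from collections import Counter


def compress(text: str, target_tokens: int, reference: str) -> str:
    """Toy token-dropping compressor (illustrative only, not LLMLingua).

    Keeps the `target_tokens` whitespace tokens with the highest unigram
    surprisal, in their original order. Surprisal is -log p(token), with
    p estimated from word counts in `reference`, a stand-in for the small
    language model that LLMLingua actually uses.
    """
    tokens = text.split()
    if len(tokens) <= target_tokens:
        return text  # already within budget, nothing to drop

    counts = Counter(reference.lower().split())
    total = sum(counts.values())
    vocab_size = len(counts) + 1  # +1 reserves probability mass for unseen words

    def surprisal(token: str) -> float:
        # Add-one (Laplace) smoothing: frequent words score low, rare or unseen words high
        p = (counts[token.lower()] + 1) / (total + vocab_size)
        return -math.log(p)

    # Rank positions by surprisal (highest first); ties go to the earlier position
    ranked = sorted(range(len(tokens)), key=lambda i: (-surprisal(tokens[i]), i))
    kept = sorted(ranked[:target_tokens])  # restore original word order
    return " ".join(tokens[i] for i in kept)


def ratio(origin_tokens: int, compressed_tokens: int) -> str:
    """Format a compression ratio in the same style as LLMLingua's output, e.g. '11.2x'."""
    return f"{origin_tokens / compressed_tokens:.1f}x"


if __name__ == "__main__":
    reference = "the the the the a a a of of is"
    print(compress("the cat sat on the mat of the house", 4, reference))  # cat sat on mat
    print(ratio(2365, 211))  # 11.2x
```

Frequent filler words ("the", "of") get low surprisal and are dropped first. A real compressor replaces the frequency table with a language model's context-aware token probabilities.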

There are now so many useful prompt engineering resources widely available. This is but a small taste of what's out there, just waiting to be explored. In bringing you this small sample, I hope that you have found at least one of these resources useful.

Happy prompting!

Matthew Mayo (@mattmayo13) holds a Master's degree in computer science and a graduate diploma in data mining. As Managing Editor, Matthew aims to make complex data science concepts accessible. His professional interests include natural language processing, machine learning algorithms, and exploring emerging AI. He is driven by a mission to democratize knowledge in the data science community. Matthew has been coding since he was 6 years old.